AWS S3 buckets have a well-earned reputation for being downright leaky.

S3 is one of the most widely used object storage services among developers, yet its security policies have historically been fairly easy to misconfigure. And as case after case has shown (the recent Premier Diagnostics incident is one example), an improperly secured S3 bucket can have a significant negative impact on a company.

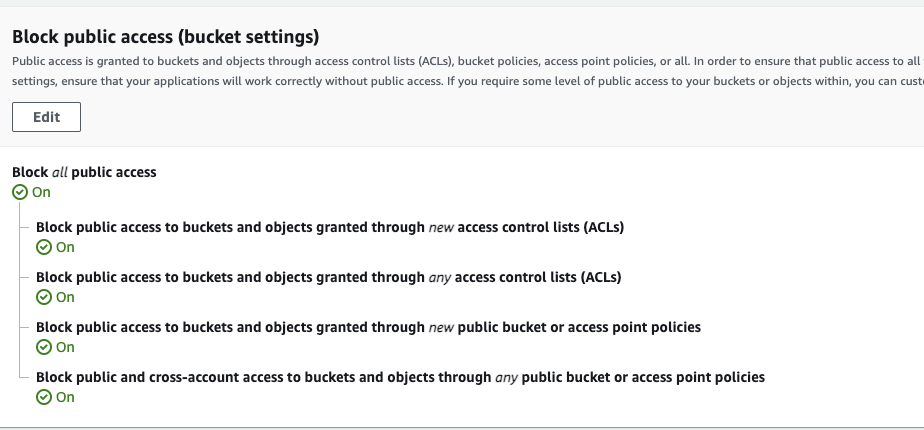

Thankfully, the crew over at AWS has made some pretty significant improvements in giving developers the tools they need to make their S3s much more secure. These measures are most visible in the way that they have gotten really good at blocking access to the buckets by default.

Currently, when you create an item inside an S3 bucket through the AWS console, it is automatically blocked from public access.

That’s a great start.

AWS has gone the extra step of adding more precautions. Just in case you create an object that allows public access by mistake, the S3 bucket has an additional safety mechanism that blocks all public access. This is true even if you configure a specific item inside the bucket to be accessible by an external user.

The controls here are automatic and you have to really go out of your way to make the stars align and end up with a leaky bucket.

With these great advancements in security in mind, let’s see how things are still going wrong.

Terraform — The Wild West to AWS’s Security Controls

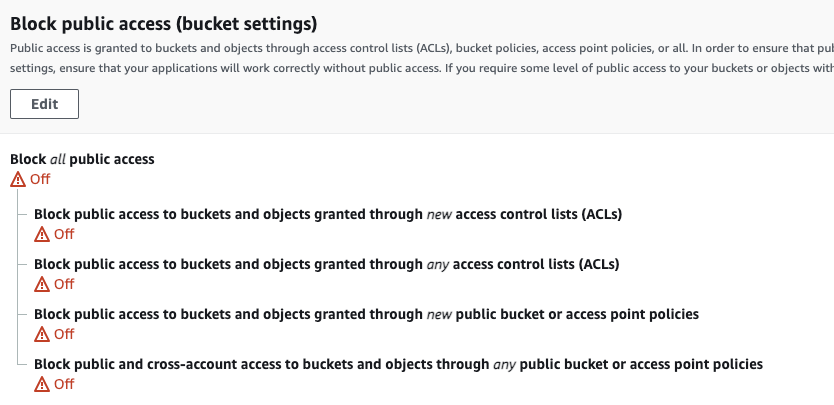

The automatic, double-layered security protections laid out above are effective, so long as you are configuring your S3 buckets in the AWS console.

Unfortunately, they do not appear to be very effective when the buckets are configured using Terraform.

In the course of our own development here at Solvo, we recently realized that these security mechanisms were showing up as false on our S3 buckets. This meant that the protections we assumed we had by default, as we would when configuring buckets in the AWS console, were not being carried over as we expected.

    resource "aws_s3_bucket" "my_bucket" {
      bucket = "my-tf-test-bucket"
      acl    = "private"

      tags = {
        Name        = "My bucket"
        Environment = "Dev"
      }
    }

This was an unpleasant surprise, to say the least, especially since Solvo, like many others out there, uses Terraform to create many of our cloud infrastructure resources, following the Infrastructure as Code (IaC) model that has become increasingly popular in recent years.

Infrastructure as Code helps to solve a lot of problems facing developers, and especially DevOps teams, when it comes to quickly and efficiently spinning up infrastructure like VMs, load balancers, etc. Instead of attempting to manually copy the exact configuration from one deployment or environment to another, it makes a lot of sense to simply do it from code. It’s repeatable, scalable, and leaves less room for errors that can end in security disasters. For a quick and helpful brief on why IaC is playing such a significant role, check out this brief from Microsoft.

So how did this challenge shake out?

How We Solved Our Terraform Issue

The fix here was both straightforward and a bit of a drag.

We basically went into our Terraform configuration and added an additional resource that turned the blocking policies back on.

    resource "aws_s3_bucket_public_access_block" "my_bucket_access_block" {
      bucket = aws_s3_bucket.my_bucket.id

      block_public_acls       = true
      block_public_policy     = true
      ignore_public_acls      = true
      restrict_public_buckets = true
    }

This was the good news.

The downside is that we did not find a way to automate this process, so we had to manually go through each Terraform configuration and add it ourselves.
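One possible way to cut down on that repetition, assuming the buckets are declared through a single `for_each` resource rather than one block each, is to chain the access block to the same collection. The variable name `bucket_names` and the resource label `buckets` below are hypothetical and not taken from our actual configuration, so treat this as a sketch rather than the fix we applied.

```hcl
# Hypothetical input: the set of bucket names to manage.
variable "bucket_names" {
  type    = set(string)
  default = ["my-tf-test-bucket"]
}

# One bucket per name in the set.
resource "aws_s3_bucket" "buckets" {
  for_each = var.bucket_names
  bucket   = each.value
}

# One access block per bucket above, created by iterating over
# the bucket resources themselves (for_each chaining).
resource "aws_s3_bucket_public_access_block" "buckets" {
  for_each = aws_s3_bucket.buckets
  bucket   = each.value.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```

With this layout, adding a name to the set would create both the bucket and its access block together, so a new bucket cannot be declared without the protection. Buckets that are already declared as individual resources would still need to be migrated or handled by hand.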

Lessons for Configuring S3s Securely

By all appearances, there doesn’t seem to be any real reason that the good folks at HashiCorp who develop Terraform would have dropped these protections. It was probably less of a decision and more of a good feature just not being carried over.

Maybe the lesson here is don’t expect every vendor that supports an ecosystem to cover every feature and tool in their own products. HashiCorp is not AWS and does a pretty stellar job of the things that they do.

As users of these products, we just need to be aware of their capabilities and limitations.

While it took some time, we successfully secured our infrastructure. Now we know to include this in our Terraform configurations going forward, and so do you.
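As an additional backstop, the AWS provider also offers an account-level resource with the same four settings. The sketch below assumes you want public access blocked for every bucket in the account, which may not suit accounts that intentionally host public content. The resource label `account_block` is made up for illustration, and this is not something we described doing above.

```hcl
# Account-wide public access block. Applies to all buckets in the
# account that the configured AWS provider credentials belong to.
resource "aws_s3_account_public_access_block" "account_block" {
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```

This complements, rather than replaces, the per-bucket resource: the per-bucket block keeps the intent visible next to each bucket, while the account-level block covers buckets that someone forgets to protect.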